How do you make sure that you are sending the best-performing messages to your audience? Is it really possible to know which content is the most engaging and effective for your contact list, and to ensure success with your Email Marketing? How can you know what “the best” actually looks like?

You test. More than that, you Split Test.

What is a Split Test?

A Split Test is the single most effective way of really getting to know what style and content of communication your audience responds to best.

Used correctly, it is an automated way of making sure that your audience always receives the best-performing email campaign possible: in other words, that you deliver what your audience actually wants to see from you.

Eliminate all the guesswork

With Split Testing, you can test a number of different versions of your Email Marketing campaign, with variations as subtle or as significant as you like. These variations can include the content itself (text, images, etc.), the subject line and the “from” name of your campaign.

These variations are sent to a small portion of your contact list (the size of which you decide). The best-performing campaign is then determined according to the winning criteria you select, and that winning campaign is sent out to the remainder of your contact list.
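To make that flow more concrete, here is a minimal Python sketch of the general technique: shuffle the contact list, give each variation an equal-sized random test pool, score each variation on the chosen criterion and pick the highest. This is an illustration under our own assumptions, not how MarketingPlatform implements it; the function names, the `share_per_variant` parameter and the scores are all hypothetical.

```python
# Hypothetical sketch of a generic split test flow; not MarketingPlatform's API.
import random

def split_contacts(contacts, variants, share_per_variant, seed=None):
    """Randomly assign an equal-sized test pool to each variant.

    Returns a dict of variant -> test pool, plus the remaining contacts
    that will later receive the winning campaign.
    """
    rng = random.Random(seed)  # seeded generator makes the split reproducible
    shuffled = list(contacts)
    rng.shuffle(shuffled)
    pool_size = round(len(shuffled) * share_per_variant)
    if pool_size * len(variants) > len(shuffled):
        raise ValueError("test pools would exceed the contact list")
    pools = {
        variant: shuffled[i * pool_size:(i + 1) * pool_size]
        for i, variant in enumerate(variants)
    }
    remainder = shuffled[len(variants) * pool_size:]
    return pools, remainder

def pick_winner(scores):
    """Return the variant with the highest score on the chosen criterion."""
    return max(scores, key=scores.get)

if __name__ == "__main__":
    contacts = [f"contact{i}@example.com" for i in range(1000)]
    pools, remainder = split_contacts(contacts, ["A", "B", "C"], 0.05, seed=1)
    print({v: len(p) for v, p in pools.items()}, len(remainder))
    # prints {'A': 50, 'B': 50, 'C': 50} 850
    # Hypothetical open rates measured on each test pool:
    print(pick_winner({"A": 0.21, "B": 0.24, "C": 0.19}))  # prints B
```

Random assignment matters here: if the pools were, say, the first and second 5% of an alphabetical or sign-up-date list, differences between them could skew the result. Note that `max` simply returns the first variant on a tie.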

You choose the send-out time for the split test, and can then either have the split test function send out the winning campaign as soon as the winner has been found, or set a specific date and time for the winning campaign to be sent.

How does Split Testing work in MarketingPlatform?

The MarketingPlatform Split Testing functionality (and rationale) for testing your Email Marketing campaigns or Marketing Automation flows breaks down as follows:

- What can you test?

- Email Content

- Subject Line

- From Name

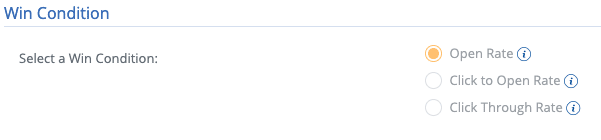

- What criteria can determine the winner?

- Open Rate

- Click-to-open-rate

- Click-through-rate

- How big should the test pools be?

- When should the tests and the winner be sent?

There is no limit on the number of splits you can use in a test, meaning there is no limit to how many different campaigns can be sent before a winner is established. In practice, however, experienced email marketers typically send 2-4 different test campaigns, depending on need.

As mentioned earlier, everything from the “from name”, through the subject line of the campaign, to larger parts of the actual content of the mail can be tested.

How much of your contact list to include in the test depends on how many splits you are testing. You will want each test sample to be large enough to be statistically significant, without taking up too much of your total contact list.

A good rule of thumb is to use 5% of the list for each variation. A test with three splits therefore uses 15% of the list, and the winning campaign is rolled out to the remaining 85%.
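The rule of thumb is simple arithmetic, sketched below in Python for an arbitrary list size. The 20,000-contact list is a made-up example, and the function name and default share are our own assumptions rather than platform settings.

```python
# Hypothetical helper applying the 5%-per-variation rule of thumb.
def plan_test_pools(list_size, variations, share_per_variation=0.05):
    """Work out test pool sizes and how many contacts get the winner."""
    per_variation = round(list_size * share_per_variation)
    test_total = per_variation * variations
    if test_total > list_size:
        raise ValueError("test pools would exceed the contact list")
    return {
        "per_variation": per_variation,               # contacts per test campaign
        "test_total": test_total,                     # contacts in the split test
        "test_share": test_total / list_size,         # fraction of the list tested
        "winner_recipients": list_size - test_total,  # receive the winning campaign
    }

if __name__ == "__main__":
    print(plan_test_pools(20_000, 3))
    # prints {'per_variation': 1000, 'test_total': 3000, 'test_share': 0.15, 'winner_recipients': 17000}
```

On a small list, 5% per variation may be only a few dozen contacts, which is worth keeping in mind given the need for a statistically significant sample.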

MarketingPlatform can then automatically determine the winner of the split test (based on your chosen criteria) and send the winning campaign to the remaining recipients.
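If you want to check for yourself whether a winner's lead is larger than random noise, one common, generic technique is a two-proportion z-test on the exported results. The sketch below is our own illustration, not a description of how MarketingPlatform picks winners, and the open counts in the example are hypothetical.

```python
# Hypothetical sketch: two-proportion z-test on split test results.
import math

def two_proportion_z_test(hits_a, sent_a, hits_b, sent_b):
    """Two-sided z-test for the difference between two rates.

    hits_*: e.g. unique opens (or clicks) for each variant
    sent_*: recipients in that variant's test pool
    Returns (z, p_value).
    """
    rate_a = hits_a / sent_a
    rate_b = hits_b / sent_b
    # Combined rate, i.e. what we'd expect if there were no real difference.
    pooled = (hits_a + hits_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        return 0.0, 1.0  # both pools at 0% or 100%: no observable difference
    z = (rate_a - rate_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

if __name__ == "__main__":
    # Hypothetical results: 260 vs 220 opens out of 1,000 recipients each.
    z, p = two_proportion_z_test(260, 1000, 220, 1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

In this example the p-value comes out at roughly 0.04, just under the conventional 0.05 threshold. The same 4-point gap measured on pools of 100 contacts would not be significant, which is why the test pools need to be reasonably large.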

The basics of setting up a split test

First, create the campaigns you want to test, exactly as you would create a regular campaign.

Next, select the split test feature from the menu, then select the individual campaigns you wish to test in the overview that appears. A split test requires at least 2 campaigns; in theory there is no upper limit, but best practice is to test no more than 4.

As with a standard campaign send-out, you select the sender, the send time and, optionally, a Google Analytics tracking code. In addition, you must define the split options. Note that the send-out time can be set individually for each campaign in the test, which means the split test can include an additional parameter: the effect of the send-out time on the result.

Testing content differences

Why test and compare the content of an email? It is worth doing if, for instance, you are unsure whether your Christmas Campaign should lead with an image of Santa Claus, a Snowman or Elsa from Frozen. Similarly, you might want to see whether the placement of your content affects the responses, engagement and click-throughs your campaign generates: do certain products in certain positions, different headlines, CTAs or button placements, or any number of other variations within the email content have a major impact on the effectiveness of your campaign?

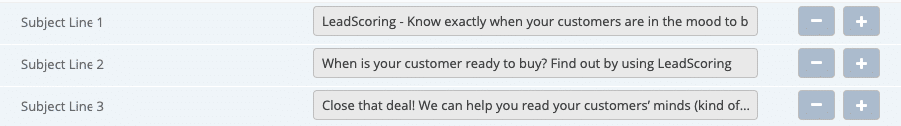

Why test the Subject Line?

The Subject Line is one of the absolute key elements in getting a contact to open an email campaign. We cover this in more detail in our article 5 Email Marketing Strategies That Will Get You Seen And Heard, but as standard practice it is always worth testing which kinds of subject line particularly catch your audience’s eye. Don’t be afraid to experiment and get a little “wild” here; the result could well surprise you.

Some things to bear in mind when running Split Tests

What do Open Rate, Click to Open Rate, and Click-Through Rate actually mean?

- Open Rate: The percentage of recipients who opened the email campaign. This is a great tool for testing the From Name, Subject Line and Send Time of your email campaign.

- Click to Open Rate: The percentage of those who opened your campaign who then clicked on a link in it.

- Click-Through Rate: The percentage of all recipients who clicked on a link in the email. This is a great all-round way to test the effectiveness of your email campaign.

In short, Open Rate (OR) measures the effectiveness of the factors that act BEFORE the contact reads the email, such as the subject line, from name and send time.

Click to Open Rate (CTOR) measures how many people clicked on a link, but counts them only against those who opened the email. CTR, in contrast, counts clicks against everyone who received the email.

Click-Through Rate (CTR) measures the effectiveness of the campaign as a whole, content included: of everyone who received the email, how many saw and read the content and then clicked on a link.
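The three metrics differ only in what is counted and what it is divided by. The Python sketch below assumes you have, per variation, the number of recipients, unique opens and unique clicks; the field names are hypothetical, and individual platforms may define the counts slightly differently (for example sent versus delivered emails).

```python
# Hypothetical sketch of the three split test metrics; field names are assumptions.
from dataclasses import dataclass

@dataclass
class CampaignStats:
    recipients: int     # emails received by the variation's test pool
    unique_opens: int   # contacts who opened at least once
    unique_clicks: int  # contacts who clicked at least one link

    @staticmethod
    def _ratio(part, whole):
        return part / whole if whole else 0.0  # avoid dividing by zero

    def open_rate(self):
        return self._ratio(self.unique_opens, self.recipients)

    def click_to_open_rate(self):
        return self._ratio(self.unique_clicks, self.unique_opens)

    def click_through_rate(self):
        return self._ratio(self.unique_clicks, self.recipients)

if __name__ == "__main__":
    stats = CampaignStats(recipients=1000, unique_opens=250, unique_clicks=50)
    print(stats.open_rate(), stats.click_to_open_rate(), stats.click_through_rate())
    # prints 0.25 0.2 0.05
```

Because clicking normally requires opening, CTR = Open Rate × CTOR (0.25 × 0.2 = 0.05 here). A change in CTR can therefore come from either the open stage or the click stage, which is why CTR on its own is hard to interpret.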

Avoid the CTR trap

It would be easy to just select CTR, since it is the “alpha-omega number”. In fact, though, it is impossible to know whether a good or bad CTR is due to the content, the subject line, the send time, the from name, the weather, the traffic or, indeed, whether the contact had cornflakes for breakfast that day.

That is why Open Rate and Click to Open Rate are valid success criteria alongside CTR: each isolates a single stage of the contact’s response, so they are better for learning how your contacts are actually responding to your communications.

The significance of the broadcast time for the result

For many senders, the timing of a send-out has a major effect on the engagement their campaigns see. You might, for instance, want to test whether sending your email campaign (with the same content) at different times of day affects the Open Rate. Do more people open your emails first thing in the morning, or after lunch? If time of day is the criterion you are testing, make sure nothing else changes between your Split Test campaigns, as this may skew your results.

Once you have established which time of day works best for you, you can then Split Test on other criteria.

Our recommendations for Split Testing

- Test everything. It can be extremely hard to predict how your audience will react to your wording and content, and what exactly triggers their interest.

- For the most accurate results, Split Test 2-4 different campaigns and make sure you are comparing apples with apples. Only test 1 variable at a time so that you can fully rely on the results: if you test more than 1 element, the test cannot show with 100% clarity what actually made the campaign perform better or worse.

- Match the winning criterion to the goal of the email. For example, consider using Open Rate as the split score for informational emails, whereas if you are pushing to make sales from your newsletter, Click-Through Rate is likely to be a better criterion for deciding a winner.

- Try different weekdays if your sending frequency allows, and experiment with the time of the split as well. For example, split four identical campaigns across 2 different weekdays and 2 different times of day.

- Plan ahead. If you want the campaign to go out on a certain day and at a certain time, remember that the split test results need time to come in. Set up and release the split test at least 1 day before the winning campaign is due to be sent.
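The “only test 1 variable at a time” recommendation can be checked mechanically before a test is released. The Python sketch below is our own illustration: it treats each campaign as a dictionary of settings and raises an error unless exactly one setting differs. The setting names (`from_name`, `subject`, `hero_image`, `send_time`) and their values are hypothetical, not MarketingPlatform fields.

```python
# Hypothetical pre-flight check; setting names are illustrative, not a real API.
def differing_settings(variants):
    """Return the names of the settings whose values differ across variants."""
    names = set().union(*(v.keys() for v in variants))
    return {n for n in names if len({v.get(n) for v in variants}) > 1}

def check_single_variable(variants):
    """Raise unless the variants differ in exactly one setting; return its name."""
    if len(variants) < 2:
        raise ValueError("a split test needs at least 2 campaigns")
    diff = differing_settings(variants)
    if len(diff) != 1:
        raise ValueError(f"expected 1 differing setting, found {sorted(diff)}")
    return diff.pop()

if __name__ == "__main__":
    base = {
        "from_name": "Anna from the Shop",
        "subject": "Our winter sale starts now",
        "hero_image": "santa.png",
        "send_time": "Tue 09:00",
    }
    variant_b = dict(base, subject="Your winter deals are here")
    print(check_single_variable([base, variant_b]))  # prints subject
```

Changing both the subject and the from name in `variant_b` would raise an error, flagging a test that could not say which change made the difference.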

See how the Split Test function works

If you are interested in learning more about split tests and how they work in MarketingPlatform, don’t hesitate to reach out to one of our great colleagues, either by booking a demo or by signing up for a free trial.

Try MarketingPlatform for free for 14 days

The trial period is free and expires after 14 days if you do not wish to continue.

When you sign up, you will also receive our educational email series and our newsletter.